Get your MMLU score 20x cheaper and 1000x faster

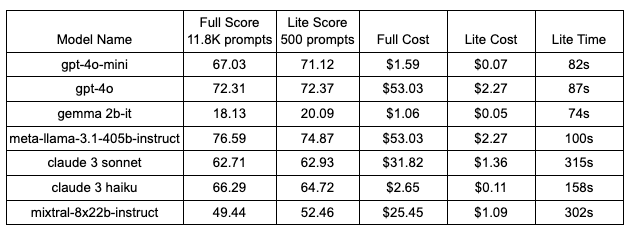

When developers want to understand how well different Large Language Models (LLMs) perform across a common set of tasks, they turn to standard benchmarks such as Massive Multitask Language Understanding (MMLU) and Grade School Math 8K (GSM8K). These benchmarks also help them track how performance shifts when the training data or the model parameters change. Running benchmarks for every change and every model, however, is time-consuming and expensive. For example, the time and cost of running MMLU-Pro, a more robust and challenging version of MMLU, over three popular LLMs is as follows.

The cost of an evaluation is the cost of model inference, priced in dollars per 1M tokens. The time to complete is extrapolated from 10 iterations of the scripts at https://github.com/TIGER-AI-Lab/MMLU-Pro.
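To make the pricing model concrete, here is a minimal sketch of how an evaluation cost follows from token counts and per-1M-token prices. The function name, the token counts, and the prices are all hypothetical placeholders chosen for illustration; they are not the figures behind the numbers in this post.

```python
def inference_cost_usd(input_tokens, output_tokens,
                       usd_per_m_input, usd_per_m_output):
    """Cost of a run when the provider bills per 1M input and output tokens."""
    return (input_tokens * usd_per_m_input
            + output_tokens * usd_per_m_output) / 1_000_000


# Hypothetical run: 2M prompt tokens and 0.5M completion tokens,
# billed at $5 and $15 per 1M tokens respectively (assumed prices).
cost = inference_cost_usd(2_000_000, 500_000, 5.0, 15.0)
print(f"${cost:.2f}")
```

Because the cost is linear in the number of tokens sent and received, cutting the number of prompts in a benchmark cuts the bill roughly in proportion.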

How can we make LLM benchmarking faster and cheaper? One angle of approach is to improve the execution speed of the LLM evaluation engine. Another is to compress the benchmark to the essential prompts. At Vijil, we set out to do both.

Over spring and summer this year, we built Vijil Evaluate, a high-performance evaluation engine that executes tests at massively parallel scale. In parallel, we constructed a “Lite” version of every benchmark of interest using tinyBenchmarks, a principled method for approximating a benchmark. The first of these benchmarks that we want to share today is MMLU-Pro.
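As a rough intuition for how a small subset can stand in for a full benchmark, the sketch below shows a weighted anchor-point estimate: each selected example represents a group of similar examples, and its result is weighted by the size of that group. This is a deliberately simplified illustration, not the tinyBenchmarks estimator itself and not Vijil's implementation; the function name, the results, and the weights are hypothetical.

```python
def estimate_accuracy(anchor_correct, anchor_weights):
    """Weighted average of per-anchor correctness (1 = correct, 0 = wrong)."""
    if len(anchor_correct) != len(anchor_weights):
        raise ValueError("need one weight per anchor example")
    total = sum(anchor_weights)
    if total <= 0:
        raise ValueError("weights must sum to a positive number")
    weighted_hits = sum(c * w for c, w in zip(anchor_correct, anchor_weights))
    return weighted_hits / total


# Hypothetical data: four anchor examples, each standing in for a group
# of similar examples in the full benchmark (group sizes used as weights).
anchor_correct = [1, 0, 1, 1]
anchor_weights = [400, 100, 300, 200]
estimate = estimate_accuracy(anchor_correct, anchor_weights)
print(f"estimated accuracy: {estimate:.1%}")
```

The quality of such an estimate depends entirely on how well the anchors and weights are chosen, which is the part a principled method has to get right.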

In this post, we compare our Lite version to the full version of MMLU-Pro using test results obtained by Vijil Evaluate. The Lite version of MMLU-Pro reproduces the score of the full version with 95% accuracy at 95% cost savings, running 1000x faster on Vijil Evaluate than the full MMLU-Pro on the default evaluation harness.

This means that we can test GPT-4o with nearly the same score (72.3 on the Lite version versus 72.4 on the full version) at about 5% of the cost ($2.3 versus $53) and 1293x the speed (87 seconds versus 31.25 hours). The benefit would be similar for any model that you want to test with the same benchmark.
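The ratios quoted for GPT-4o can be checked with a few lines of arithmetic. The sketch below uses only the figures stated above, reading the first number in each pair as the Lite run and the second as the full run; the variable names are ours.

```python
# Figures quoted above for GPT-4o (Lite run first, full run second).
lite_cost_usd, full_cost_usd = 2.3, 53.0
lite_seconds = 87
full_seconds = 31.25 * 3600  # 31.25 hours expressed in seconds

cost_fraction = lite_cost_usd / full_cost_usd  # Lite cost as a share of full cost
cost_ratio = full_cost_usd / lite_cost_usd     # how many times cheaper Lite is
speedup = full_seconds / lite_seconds          # how many times faster Lite is

print(f"cost fraction: {cost_fraction:.1%}")  # 4.3%
print(f"cost ratio: {cost_ratio:.1f}x")       # 23.0x
print(f"speedup: {speedup:.0f}x")             # 1293x
```

The cost fraction of roughly 4.3% is what the text rounds to 5%, and the 23x cost ratio is consistent with the "20x cheaper" in the title.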

In the charts below, note that cost and time are plotted on a logarithmic scale.

Use Vijil Evaluate to run MMLU-Pro quickly and cheaply

Vijil Evaluate makes it easy to run MMLU-Pro — either the full or the Lite version — on any LLM to which you have access. Sign up at https://vijil.ai to use the minimalist UI and dashboard. Or just modify this Colab notebook with your provider and model.

You will need a Vijil API key to get started. To get one, send an email to contact@vijil.ai (tell them Aditya sent you).

——

Aditya, who worked at Vijil this summer, is a 5th-year PhD student at the University of Illinois at Urbana-Champaign. His work broadly looks at how individuals and groups can understand large algorithmic systems (e.g., pricing systems, recommendation systems, LLMs) and work together to achieve better, fairer outcomes. Prior to starting his PhD, he worked as a Quant at Goldman Sachs. He holds a Master’s in Computational Science and Engineering from Harvard (working with Hima Lakkaraju) and a Bachelor of Science in Computer Science and Applied & Computational Mathematics from Caltech. You can learn more about him at https://adityakaran.github.io/.